In 2004, the U.S. Department of Defense issued a challenge: $1 million to the first team of engineers to develop an autonomous vehicle to race across the Mojave Desert.

Though the prize went unclaimed, the challenge publicized an idea that once belonged to science fiction — the driverless car. It caught the attention of Google co-founders Sergey Brin and Larry Page, who convened a team of engineers to buy cars from dealership lots and retrofit them with off-the-shelf sensors.

But making the cars drive on their own wasn’t a simple task. At the time, the technology was new, leaving designers for Google’s Self-Driving Car Project without a lot of direction. YooJung Ahn, who joined the project in 2012, says it was a challenge to know where to start.

“We didn’t know what to do,” says Ahn, now the head of design for Waymo, the autonomous car company that was built from Google’s original project. “We were trying to figure it out, cutting holes and adding things.”

But over the past five years, autonomous technology has advanced in a series of leaps. In 2015, Google completed its first driverless trip on a public road. Three years later, Waymo launched its Waymo One ride-hailing service to ferry Arizona passengers in self-driving minivans with human backup drivers on board. Last summer, the cars began picking up customers all on their own.

Still, the environmental challenges facing these autonomous cars are many: poor visibility, inclement weather and difficulty distinguishing a parked car from a pedestrian, to name a few. But designers like Ahn are trying to fix the problems, with high-tech sensors that reduce blind spots and help autonomous cars see, despite obstructions.

Eyes on the Road

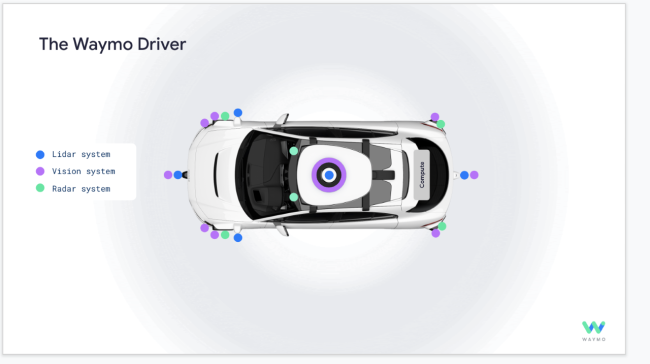

A driverless car understands its environment using three types of sensors: lidar, cameras and radar. Lidar creates a three-dimensional model of the streetscape. It helps the car judge the distance, size and direction of the objects around it by sending out pulses of light and measuring how long they take to return.

“Imagine you have a person 100 meters away and a full-size poster with a picture of a person 100 meters away,” Ahn says. “Cameras will see the same thing, but lidar can figure out whether it’s 3D or flat to determine if it’s a person or a picture.”
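The timing principle behind lidar can be sketched in a few lines of Python. This is an illustration only, not Waymo's software: the function names and the 5-centimeter flatness tolerance are assumptions made for the example, and the poster is assumed to face the sensor squarely. A pulse travels out and back, so the range is half the round-trip time multiplied by the speed of light; a flat poster returns nearly identical ranges across its surface, while a person's body does not.

```python
from typing import Sequence

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to a target from a pulse's round-trip time.

    The pulse covers the distance twice (out and back), so halve the path.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def looks_flat(ranges_m: Sequence[float], tolerance_m: float = 0.05) -> bool:
    """Hypothetical check: True when every return from one object lies at
    almost the same range, as a poster facing the sensor would.

    tolerance_m is an assumed value chosen for illustration.
    """
    return max(ranges_m) - min(ranges_m) <= tolerance_m


# A pulse that returns after about 667 nanoseconds hit something 100 m away.
round_trip_s = 2 * 100.0 / SPEED_OF_LIGHT_M_PER_S
print(f"{range_from_round_trip(round_trip_s):.1f} m")

poster_ranges = [100.00, 100.01, 100.00]   # nearly constant depth
person_ranges = [99.80, 100.00, 100.15]    # torso, arms and legs differ
print(looks_flat(poster_ranges), looks_flat(person_ranges))
```

A real system would work on dense point clouds and account for tilted surfaces; the sketch only shows why depth information separates a person from a picture when a camera image alone cannot.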

Cameras, meanwhile, provide the contrast and detail necessary for a car to read street signs and traffic lights. Radar sees through dust, rain, fog and snow to classify objects based on their velocity, distance and angle. The three types of sensors are packed into a domelike structure atop Waymo’s latest cars and also placed around the body of the vehicle to capture a full picture in real time, Ahn says. The sensors can detect an open car door a block away or gauge the direction a pedestrian is facing. These small but important cues allow the car to respond to sudden changes in its path.

The cars are also designed to see around other vehicles while on the road, using a 360-degree, bird’s-eye-view lidar that can see up to 300 meters away. Think of a U-Haul blocking traffic, for example. A self-driving car that can’t see past it might wait patiently for it to move, causing a jam. But Waymo’s latest sensors can detect vehicles coming in the opposite lane and decide whether it’s safe to circumvent the parked truck, Ahn says.

And new high-resolution radar is designed to spot a motorcyclist from several football fields away. Even in poor visibility conditions, the radar can see both static and moving objects, Ahn says. The ability to measure the speed of an approaching vehicle is helpful during maneuvers such as changing lanes.
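The article does not say how Waymo's radar derives speed. One common approach in automotive radar is the Doppler shift: a wave reflected from an approaching object comes back at a slightly higher frequency, and the size of that shift gives the closing speed. The sketch below assumes that method; the function name is hypothetical, and the 77 GHz carrier is a typical automotive radar band used here as an illustrative default, not a Waymo specification.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def radial_speed_m_per_s(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Closing speed of a target from the Doppler shift of its radar echo.

    Uses the two-way Doppler relation v = f_d * c / (2 * f_0).
    A positive shift is taken to mean the target is approaching (assumed
    sign convention). carrier_hz defaults to an assumed 77 GHz.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_M_PER_S / (2.0 * carrier_hz)


# Echo shift produced by a vehicle closing at 30 m/s (about 67 mph).
shift_hz = 2 * 30.0 * 77e9 / SPEED_OF_LIGHT_M_PER_S
print(f"shift: {shift_hz:.0f} Hz, speed: {radial_speed_m_per_s(shift_hz):.1f} m/s")
```

The measurement covers only the component of motion along the line between radar and target, which is the component that matters when judging whether a gap in the next lane is closing.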

Driverless Race

Waymo isn’t the only one in the race to develop reliable driverless cars fit for public roads. Uber, Aurora, Argo AI, and General Motors’ Cruise subsidiary have their own projects to bring self-driving cars to the road in significant numbers. Waymo’s new system cuts the cost of its sensors in half, which the company says will accelerate development and help it collaborate with more car manufacturers to put more test cars on the road.

However, challenges remain, as refining the software for fully autonomous vehicles is much more complex than building the cars themselves, says Marco Pavone, director of the Autonomous Systems Laboratory at Stanford University. Teaching a car to use humanlike discretion, such as judging when it’s safe to make a left-hand turn amid oncoming traffic, is more difficult than building the physical sensors it uses to see. Furthermore, he says long-range vision may be important when traveling in rural areas, but it is not especially advantageous in cities, where driverless cars are expected to be in highest demand.

“If the Earth were flat with no obstructions, that would potentially be useful,” Pavone says. “But it’s not as helpful in cities, where you are always bound to see just a few meters in front of you. It would be like having the eyes of an eagle but the brain of an insect.”

Source: www.discovermagazine.com
